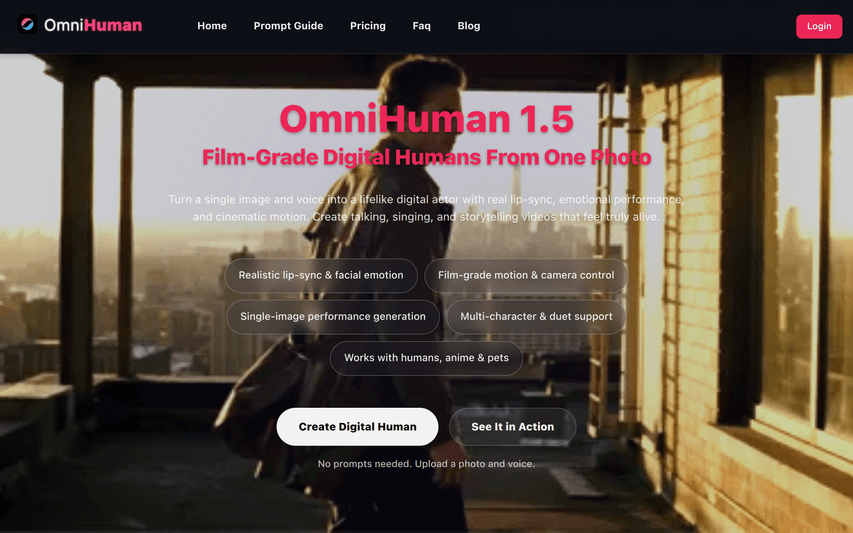

OmniHuman‑1.5

About

OmniHuman-1.5 is an AI model that creates film-grade digital human videos from a single image, an audio clip, and optional text prompts. It produces precise lip-sync, natural emotional expressions, and dynamic gestures, and it supports multi-character scenes and free camera control. It works with humans, animals, and stylized avatars (e.g., anime or mascots) and delivers production-ready content in minutes, which suits storytelling, marketing, education, and virtual content creation.

Key Features

Single‑Image Performance Generation

Create full, film-grade video performances from just one image (portrait, anime, or pet); no multi-frame input is required.

Realistic Lip‑Sync & Emotional Acting

Precise lip synchronization, with audio-driven emotional expression, breathing, natural pauses, and dramatic intent, for believable performances.

Multi‑Character Scenes & Voice Routing

Support for duets and group scenes with separate audio tracks routed to different characters in a single frame for natural interactions.

Cinematic Motion & Free Camera Control

Film-grade motion generation with adjustable camera movements and framing to produce production-ready shots.

Cross‑Style Compatibility

Works with real humans, stylized avatars (anime/mascot), and animals, maintaining consistent expression and motion across styles.

How to Use OmniHuman‑1.5

1) Upload a clear single image (portrait, anime art, or pet). Use a high-quality JPG for best results.

2) Upload an audio clip (voice, narration, or song). OmniHuman analyzes tone and rhythm for lip-sync and emotion.

3) (Optional) Add text prompts to guide camera moves, actions, styling, or specific gestures.

4) Generate a preview, fine-tune with prompts as needed, then download the final film-grade video.
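
The steps above come down to collecting one image, one audio clip, and optional prompts before generating. As a rough pre-upload checklist, that input bundle could be sketched in Python as follows. Everything here is hypothetical: the page describes an upload-and-generate workflow, not a programmatic API, so `GenerationJob`, `problems`, and the accepted file extensions are illustrative assumptions rather than anything OmniHuman-1.5 defines.

```python
from dataclasses import dataclass, field
from pathlib import Path

# Hypothetical names throughout; this is NOT an OmniHuman-1.5 API.
IMAGE_EXTS = {".jpg", ".jpeg", ".png"}  # assumption: the page only recommends JPG
AUDIO_EXTS = {".mp3", ".wav"}           # assumption: accepted audio formats are not stated


@dataclass
class GenerationJob:
    image: str                                        # step 1: single reference image
    audio: str                                        # step 2: voice, narration, or song
    prompts: list[str] = field(default_factory=list)  # step 3: optional text guidance

    def problems(self) -> list[str]:
        """Return readable issues; an empty list means the inputs look complete."""
        issues = []
        if Path(self.image).suffix.lower() not in IMAGE_EXTS:
            issues.append(f"unsupported image type: {self.image}")
        if Path(self.audio).suffix.lower() not in AUDIO_EXTS:
            issues.append(f"unsupported audio type: {self.audio}")
        return issues


# Example bundle: one portrait, one narration track, one camera prompt.
job = GenerationJob("portrait.jpg", "narration.wav", ["slow push-in on the face"])
print(job.problems())
```

Only the file names are checked, not the files themselves, so the sketch runs without any media on disk. Step 4 (preview, refine, download) happens in the product itself and is left out.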