Moterra AI

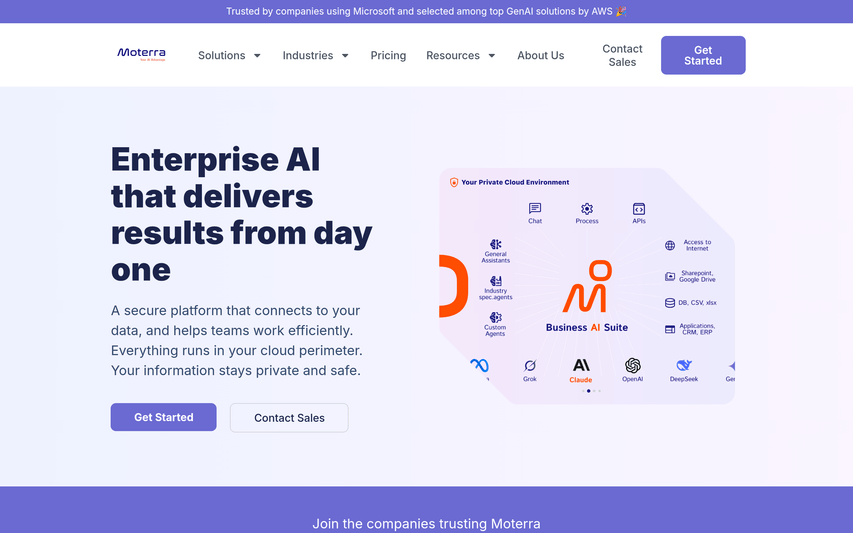

Moterra is an enterprise AI tool suite that connects to your company's data. It helps teams work more efficiently and securely within your own cloud.

About

Moterra provides an enterprise-grade suite of AI assistants for core business tasks, all operating within your cloud. It helps teams with internal knowledge search, content writing, data analysis, and document comparison. By connecting directly to your company's documents and systems, Moterra delivers accurate, reliable, and context-aware answers with citations. It eliminates data exposure risks and ensures full compliance, making it the secure way to leverage AI for tangible business results.

Key Features

AI Internal Knowledge Assistant

Search company files and get clear answers from your own documents.

AI Content Writing Assistant

Draft proposals, reports, and emails faster while staying compliant with GDPR and ISO standards.

AI Data Analyst

Ask business questions in plain language and get instant answers with charts and insights.

AI Document Comparison Assistant

Compare contracts, policies, or RFPs and identify changes in seconds.

How to Use Moterra AI

To get started, contact us and you will receive a login to the tool. Setup takes days, not months: our AI specialists will customise everything to your templates for maximum impact.

Frequently Asked Questions

Q: Will your AI expose our confidential data?

A: Never. Moterra runs entirely in your own cloud environment, and data never leaves your control. Unlike with public AI tools, your files aren’t used to train external models; they remain fully private and compliant.

Q: What makes Moterra different from other “AI platforms”?

A: Most platforms give you one chatbot and keep your data on their servers. Moterra is different. It runs inside your cloud perimeter. You can build task-specific agents, run enterprise search, and stay compliant with GDPR and ISO standards.

Q: What technologies are behind Moterra’s solution?

A: Moterra uses generative AI with large language models (Anthropic Claude via Amazon Bedrock). Answers are grounded by retrieval over your documents (RAG), applying NLP across structured and unstructured data. On AWS, it runs in your VPC with PrivateLink and customer-managed KMS keys. Models are accessed through private endpoints and are never trained on your data.
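To illustrate what "grounded by retrieval over your documents (RAG)" means in general terms, here is a minimal Python sketch. It is not Moterra's implementation. It ranks document chunks against a question and builds a prompt whose numbered passages a model could cite. It assumes simple cosine similarity over word counts in place of the vector embeddings a production system would typically use. The `Chunk`, `retrieve` and `build_grounded_prompt` names and the sample documents are hypothetical, and the actual model call (for example via Amazon Bedrock) is omitted.

```python
import math
import re
from collections import Counter
from dataclasses import dataclass


@dataclass
class Chunk:
    source: str  # hypothetical document name, used as the citation label
    text: str


def tokenize(text: str) -> list[str]:
    # Lowercase word tokens; an assumption standing in for real embeddings.
    return re.findall(r"[a-z0-9]+", text.lower())


def cosine(a: Counter, b: Counter) -> float:
    # Cosine similarity between two term-count vectors (0.0 if either is empty).
    dot = sum(a[t] * b[t] for t in a if t in b)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(query: str, chunks: list[Chunk], k: int = 2) -> list[Chunk]:
    # Rank chunks by similarity to the query; keep the top k with a nonzero score.
    q = Counter(tokenize(query))
    scored = [(cosine(q, Counter(tokenize(c.text))), c) for c in chunks]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [c for score, c in scored[:k] if score > 0]


def build_grounded_prompt(query: str, hits: list[Chunk]) -> str:
    # Number each retrieved passage so the model can cite it as [1], [2], ...
    context = "\n".join(f"[{i}] ({c.source}) {c.text}" for i, c in enumerate(hits, start=1))
    return (
        "Answer using only the numbered passages below and cite them.\n"
        f"{context}\n\nQuestion: {query}"
    )


# Hypothetical sample documents for the usage example.
docs = [
    Chunk("travel-policy.pdf", "Employees may book economy flights for trips under six hours."),
    Chunk("expenses.docx", "Meal expenses are reimbursed up to 40 EUR per day."),
    Chunk("security.md", "Laptops must use full-disk encryption."),
]

question = "What is the daily meal expense limit?"
print(build_grounded_prompt(question, retrieve(question, docs)))
```

Here only the `expenses.docx` chunk shares a term with the question, so it is the single passage placed in the prompt. Restricting the model to numbered passages is what makes answers traceable to citations.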