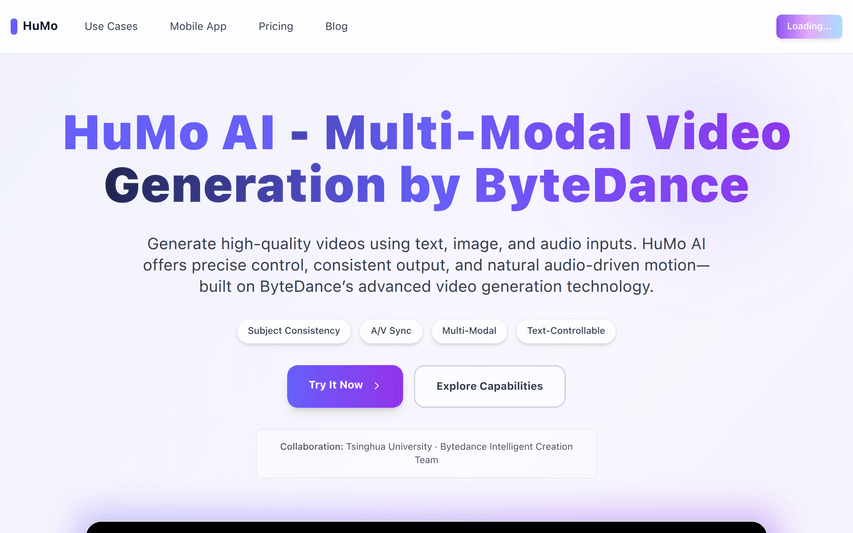

Humo AI

Multi-modal input, human-centric video with consistent subject & audio-visual sync

About

Humo AI supports multi-modal input (text, image, audio) in three modes (TI, TA, TIA) and generates human-centric videos with consistent subjects, audio-visual sync, and text-controllable adjustments.

Key Features

Multi‑modal Input (TI / TA / TIA)

Supports Text+Image (TI), Text+Audio (TA), and Text+Image+Audio (TIA) modes, so you can condition generation on a prompt plus a reference image, speech audio, or both, depending on the use case.

Subject Consistency & Identity Preservation

Keeps the same person or subject consistent across outputs while allowing appearance and outfit edits via text prompts.

Accurate Audio‑Visual Sync & Lip‑Sync

Produces natural lip motion and facial expressions that align with the supplied audio for believable dialogue, dubbing, and voice‑driven animation.

Text‑Controllable Scene & Style Editing

Adjust outfits, hairstyles, backgrounds, camera framing and actions through prompts for fast iterative creative control.

How to Use Humo AI

1) Choose a generation mode: TI (Text+Image), TA (Text+Audio), or TIA (Text+Image+Audio).
2) Upload a reference image (optional) and/or an audio file if you need identity preservation or lip‑sync.
3) Enter a detailed text prompt describing the scene, actions, style, and any appearance edits.
4) Click Generate, review the output, then refine the prompt or assets and re‑generate until satisfied.

Download the final video when ready.
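
The mode in step 1 follows from which assets you supply in step 2, so the choice can be sketched as a small lookup. The Python below is only an illustration: `GenerationRequest` and `select_mode` are hypothetical names invented for this example, not part of any Humo AI API, and the rule that a prompt is always required is inferred from the fact that all three modes include text.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class GenerationRequest:
    """Hypothetical container for the inputs described in the steps above."""
    prompt: str
    image_path: Optional[str] = None  # reference image, for identity preservation
    audio_path: Optional[str] = None  # audio file, for lip-sync


def select_mode(request: GenerationRequest) -> str:
    """Return "TI", "TA", or "TIA" depending on which assets are supplied."""
    # Every mode includes text, so an empty prompt is rejected up front.
    if not request.prompt.strip():
        raise ValueError("a text prompt is required in every mode")
    if request.image_path and request.audio_path:
        return "TIA"
    if request.image_path:
        return "TI"
    if request.audio_path:
        return "TA"
    # Text alone matches none of the three listed modes.
    raise ValueError("supply a reference image, an audio file, or both")
```

For example, a request with a prompt and only an audio file maps to TA, while adding a reference image to the same request maps to TIA.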