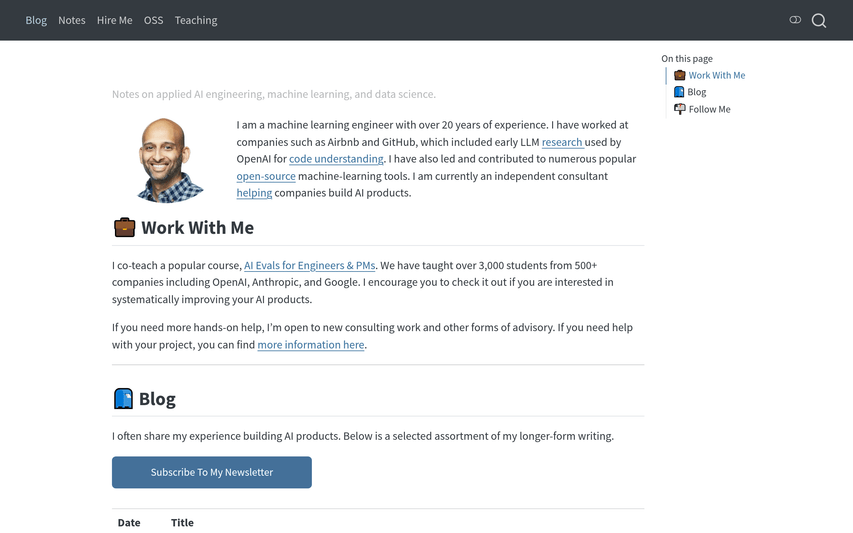

Hamel Husain

This blog serves as a professional journal for advanced AI exploration, where the author discusses evaluation systems for LLMs, large machine learning

About

This blog provides detailed writing on artificial intelligence, concentrating on assessment systems for AI products, LLM best practices, and practical machine learning workflows. With articles covering methods like error analysis, synthetic data creation, and useful ML tooling, the content balances research, engineering expertise, and implementable advice for developers, product managers, and data scientists alike.

Key Features

In-depth LLM evals and guides

Long-form, practical articles that explain evaluation systems, using LLMs as judges, adversarial validation, and other methods to measure and improve model behavior.

Applied ML engineering tutorials

Step-by-step posts on production ML workflows, debugging, tokenization gotchas, latency optimization, and tools for shipping reliable AI products.

Open-source tooling and resources

Notes, demos, and links to OSS projects and libraries (e.g., Inspect AI, nbsanity, ghapi) that the author has built or recommends for building and evaluating AI systems.

Course & consulting offerings

Access to an instructor-led course (AI Evals for Engineers & PMs) and information on hiring the author for consulting or advisory engagements.

Newsletter and curated updates

Option to subscribe to a newsletter to receive new posts, course announcements, and curated insights about building with LLMs.

How to Use Hamel Husain

1) Browse the blog homepage or use the archive to find articles on evaluation, LLM best practices, and tooling. 2) Read detailed guides and follow links to referenced OSS projects or code samples for hands-on experiments. 3) Subscribe to the newsletter to receive new posts and updates. 4) If you need help applying the material, follow the 'Work With Me' / consulting links to inquire about the course or advisory services.